The UX of AI

- by R27

- posted February 6, 2018

Below are the first two paragraphs; read the whole article via the link below.

Using Google Clips to understand how a human-centered design process elevates artificial intelligence

By Josh Lovejoy

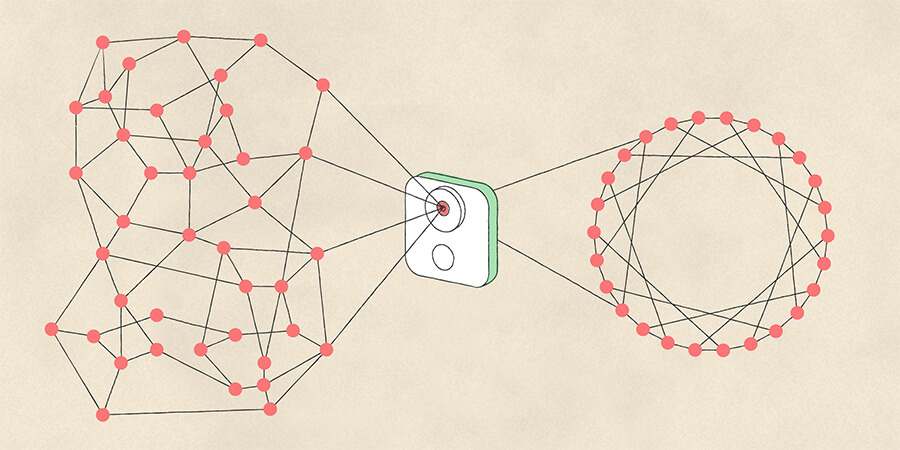

As was the case with the mobile revolution, and the web before that, machine learning will cause us to rethink, restructure, and reconsider what’s possible in virtually every experience we build. In the Google UX community, we’ve started an effort called “human-centered machine learning” to help focus and guide that conversation. Using this lens, we look across products to see how machine learning (ML) can stay grounded in human needs while solving for them—in ways that are uniquely possible through ML. Our team at Google works across the company to bring UXers up to speed on core ML concepts, understand how to best integrate ML into the UX utility belt, and ensure we’re building ML and AI in inclusive ways.

Google Clips is an intelligent camera designed to capture candid moments of familiar people and pets. It uses completely on-device machine intelligence to learn to only focus on the people you spend time with, as well as to understand what makes for a beautiful and memorable photograph. Using Google Clips as a case study, we’ll walk through the core takeaways after three years of building the on-device models, industrial design, and user interface—including what it means in practice to take a human-centered approach to designing an AI-powered product.

The UX of AI – read the full article here